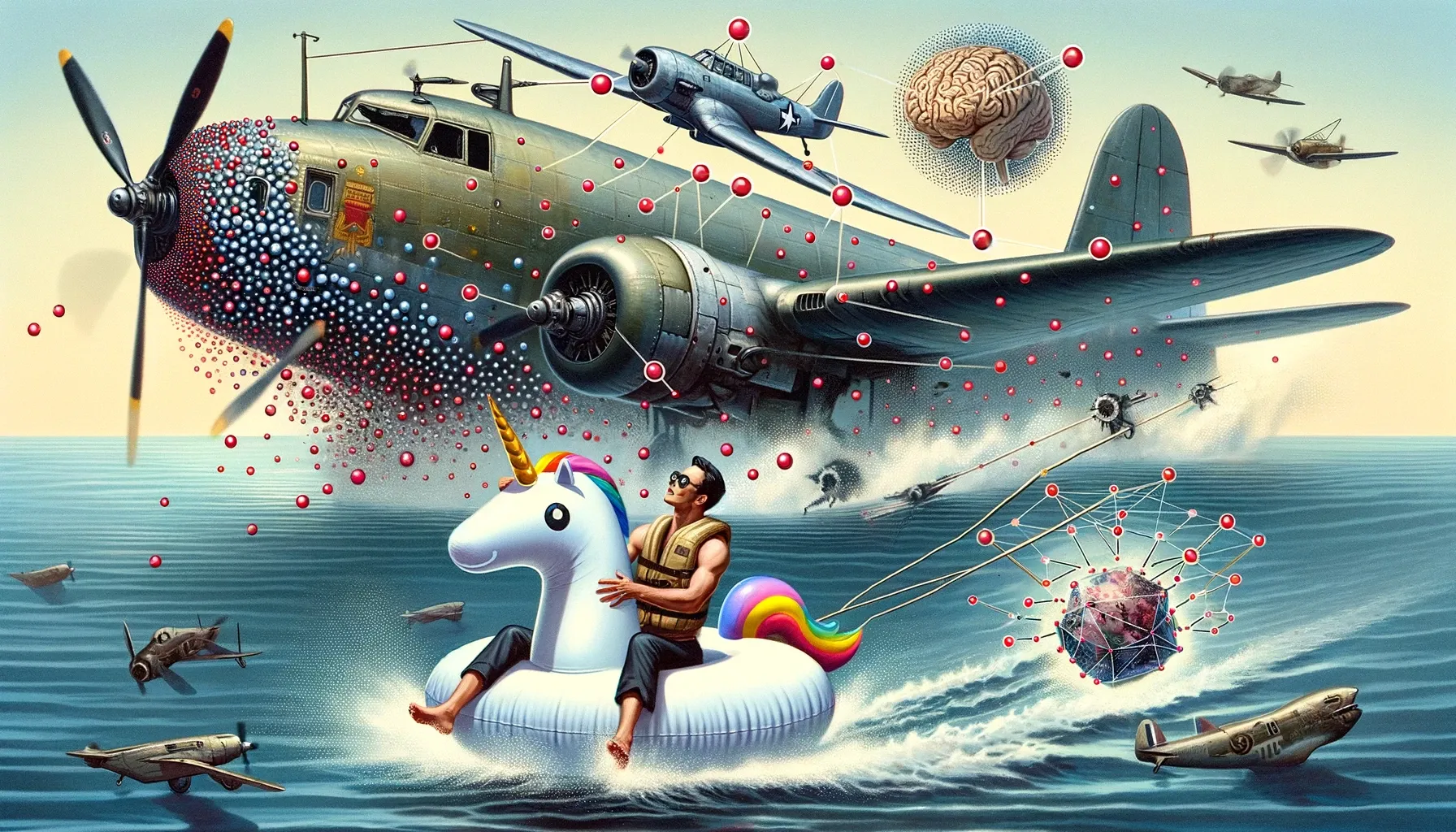

AI Game-Changers, Survivorship Bias, and a Shark Story You Wouldn't Believe If It Weren't on Video

Discover OpenAI's Sora, a leap in video generation, and Google's Gemini 1.5, alongside a WWII lesson in survivorship bias and a thrilling shark encounter story.

Sora appeared and overshadowed Gemini 1.5.

Sora is a giant leap in text-to-video and image-to-video generation.

Gemini 1.5 could be the greatest increase in capabilities since GPT-4.

So I'll cover both in Thing 3. 🤓

But first...

Thing 1 - Bullet holes and survivorship bias

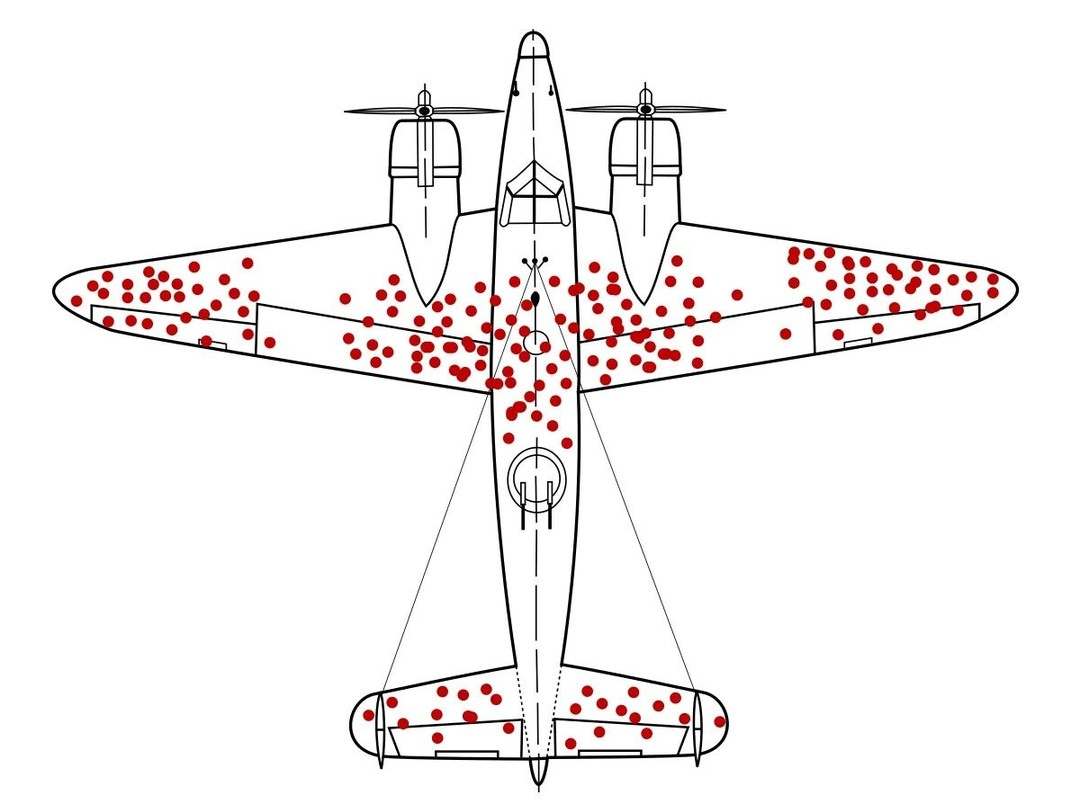

The story behind this image is an example of survivorship bias. I'll explain.

This is the World War II-era aircraft, the Lockheed PV-1 Ventura.

During World War II, the military wanted to reinforce its aircraft to reduce the number of planes being shot down.

They analyzed the planes that returned and marked where they had been hit by enemy fire, shown here as red dots.

The first instinct was to reinforce those areas. But that was wrong.

Why?

A statistician named Abraham Wald made critical observations:

- The military was looking just at the planes that made it back.

- They had no data on the ones that were shot down.

Wald suggested that the areas without red dots were the critical ones to reinforce. Planes hit in those areas most likely never made it back, which is why no holes showed up there on the survivors.

This led to reinforcing those unmarked areas, saving countless aircraft and lives in subsequent battles.

Survivorship bias, which this story illustrates, is when we focus on the winners (those who 'survived' a challenge) and ignore the losers (those who didn't make it).

This can make us think that success is more common than it is, because we're not seeing the whole picture.
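To see why the missing planes matter, here's a toy Python simulation. Every number in it is made up for illustration: hits land uniformly across five hypothetical sections, and a hit to the engine or cockpit is far more likely to bring a plane down. If you count holes only on the planes that return, the engine looks like the least-hit spot, even though it was hit just as often as everything else. That is exactly Wald's point.

```python
import random
from collections import Counter

# Hypothetical aircraft sections; each hit lands on one of them uniformly at random.
SECTIONS = ["engine", "cockpit", "fuselage", "wings", "tail"]

# Hypothetical probability that a single hit in a given section downs the plane.
LOSS_PROB = {"engine": 0.6, "cockpit": 0.5, "fuselage": 0.05, "wings": 0.05, "tail": 0.1}


def simulate(n_planes=10_000, hits_per_plane=3, seed=0):
    """Return per-section hit counts for all planes and for returning planes only."""
    rng = random.Random(seed)
    all_hits = Counter()
    survivor_hits = Counter()
    for _ in range(n_planes):
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        all_hits.update(hits)
        # The plane makes it back only if none of its hits brings it down.
        survived = all(rng.random() >= LOSS_PROB[s] for s in hits)
        if survived:
            # Only returning planes can be inspected for bullet holes.
            survivor_hits.update(hits)
    return all_hits, survivor_hits


if __name__ == "__main__":
    all_hits, survivor_hits = simulate()
    total_all = sum(all_hits.values())
    total_surv = sum(survivor_hits.values())
    print(f"{'section':<10}{'all planes':>12}{'returners':>12}")
    for s in SECTIONS:
        print(f"{s:<10}{all_hits[s] / total_all:>12.1%}{survivor_hits[s] / total_surv:>12.1%}")
```

Across all planes, each section gets roughly 20% of the hits. Among the returners, engine and cockpit hits are heavily underrepresented, because the planes that took them mostly didn't come back.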

Thing 2 - Florida man and the fish...

Just a dude on a leaky unicorn floaty, getting dragged miles from shore by a 10-foot shark he hooked with his fishing rod.

To have the attitude, commitment, and "going with the flow" of this Florida man...

Here's the full video in case you wanna watch.

Thing 3 - This week in AI

OpenAI Sora

OpenAI's Sora is an advanced text-to-video model that can generate realistic and imaginative scenes from text instructions, producing high-quality videos up to one minute long.

It shows signs of acting as a world simulator. It can generate videos with 3D consistency, long-range coherence, and object permanence, demonstrating an apparent understanding of 3D space.

Even more impressive, Sora can sometimes simulate actions that affect the state of the world, such as a painter leaving strokes on a canvas that persist over time, or a person eating a burger and leaving bite marks.

It can also simulate artificial processes, such as controlling a player in Minecraft. None of this was explicitly programmed for 3D or objects; these capabilities emerge purely from scale. The model is that freakin' large!

Google Gemini 1.5

Gemini 1.5 builds upon Google's Transformer and MoE (mixture-of-experts) architecture.

Gemini 1.5 Pro supports a context window of up to 1 MILLION tokens (for comparison, GPT-4 Turbo has 128k and Claude 2.1 has 200k). And it can use them like a champ!

Here's a mindblowing example:

Gemini 1.5 learned to translate from English to Kalamang purely from material placed in its context window: extensive linguistic documentation (a grammar book), a dictionary, and about 400 parallel sentences.

And that's despite Kalamang having fewer than 200 speakers and almost no presence online.

Other AI news this week

- Meta released MAGNeT, a single non-autoregressive transformer model for text-to-music and text-to-sound generation

- OpenAI started testing long-term memory for ChatGPT

- NVIDIA introduced Chat with RTX - a customizable chatbot that runs locally on PCs with NVIDIA RTX GPUs

- ElevenLabs announced sound effects (early access) - this pairs so well with Sora...

How's that for a week?

Cheers, Zvonimir